Shifting Left in Data Quality: Reflections and Experiences

Breaking down silos between software and data teams to solve quality issues at their source

I've been noticing several posts on LinkedIn and Medium lately about "shifting left" in data quality, and it got me thinking about our industry's journey. How did we get to this point where we're talking about moving quality checks earlier in the data pipeline? Let me share some reflections on this concept and how it connects to my experiences throughout my data career.

The Glory Days of ETL

Looking back at data engineering (or, as it was often called back then, ETL development), there's a certain nostalgia for the old days. The first reporting pipelines were usually built on production databases (or, in good scenarios, on replicas or database backup dumps). Because things were done the ETL way, much of the processing was optimised in memory before loading. When the load ran, we'd get validation errors for type mismatches, conditional triggers, and the like.

Before "big data" became a thing, this kept pipelines rigid and robust. Changes were made thoughtfully, and people would consult data folks (back then, mostly DBAs) before making them. It wasn't perfect, but it had built-in guardrails.

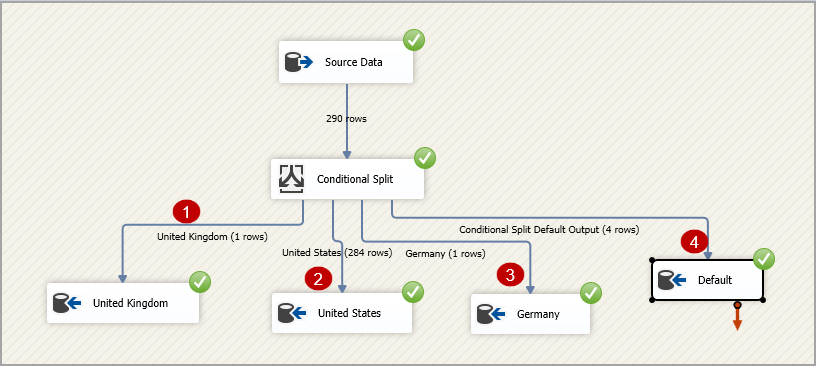

I remember working on SSIS packages back in the day, and when you tried to map a string to an int, it would just fail—no questions asked. The system enforced correctness because it simply couldn't work otherwise. The constraints made our lives harder, but the data was more reliable.
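The sketch below shows that "fail fast" behaviour outside SSIS. It is a strictly typed load step: if a value doesn't match the declared column type, the row is rejected instead of being silently coerced. The schema, column names, and `LoadError` class are hypothetical. They illustrate the idea, not how SSIS works internally.

```python
# Sketch of a strictly typed load step: no silent coercion, fail on mismatch.
# SCHEMA and its column names are hypothetical examples.
SCHEMA = {"order_id": int, "amount_cents": int, "country": str}


class LoadError(Exception):
    """Raised when a row does not match the target schema."""


def load_row(row: dict) -> dict:
    """Return the row unchanged if it matches SCHEMA, otherwise raise LoadError."""
    for column, expected_type in SCHEMA.items():
        if column not in row:
            raise LoadError(f"missing column: {column}")
        value = row[column]
        # Exact type check: the string "42" is rejected for an int column,
        # and so is True (bool is a subclass of int in Python).
        if type(value) is not expected_type:
            raise LoadError(
                f"{column}: expected {expected_type.__name__}, "
                f"got {type(value).__name__}"
            )
    return row


load_row({"order_id": 1, "amount_cents": 999, "country": "DE"})  # passes
# load_row({"order_id": "1", "amount_cents": 999, "country": "DE"})
#   -> LoadError: order_id: expected int, got str
```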

Then Came Big Data...

With the rise of big data, we started seeing event-driven architectures, with events sent to data teams for consumption, and this is where problems began to appear. Communication was not streamlined. Sometimes software engineers would say, "Oh, it's complicated for us to add this field, but you know what? You can join it with this other event later and get it properly." Then someone changes one of the two events, and suddenly we have a complete data mismatch.

What I've seen happen repeatedly is something like this:

The product team wants a cool new feature

Software engineers build it and start pumping events

Data engineers get tasked with incorporating this data

No one thinks about data validation until something breaks

Data correctness and validity are more critical than ever in the AI age. We should be seeing a strong push to enforce data contracts, data validation, and more robust pipelines, but we aren't. The question is, why?

Bridging the Software-Data Engineering Divide

The disconnect between software engineers and data teams is probably the most significant contributor to data quality issues. It's also the hardest to solve because it's fundamentally a human communication problem, not a technical one.

Understanding the Software Engineer's Perspective

First, let's acknowledge that software engineers aren't maliciously creating “bad data”. They're working within their constraints and incentives:

They're measured on shipping features, not data quality

They often lack visibility into how data is used downstream

They may not understand the impact of schema changes

Their product managers rarely prioritise data quality work

I once worked with a brilliant front-end developer who genuinely asked me, "Why does it matter if this field is sometimes null? Can't you just filter those out?" He wasn't being difficult—he truly didn't understand how that seemingly minor issue could cascade into reporting problems that affected executive decisions.

Effective Communication Strategies

So, how do we get software engineers to care about data quality? Here are approaches I've found effective:

Make it Personal

Show them exactly how their data is used. Create a direct line between the events they emit and the dashboards executives use to make decisions. I've walked engineers through the entire pipeline from their code to the CEO's dashboard. After seeing how their events directly affected strategic choices, they suddenly cared much more about data quality.

Create Shared Language and Understanding

One issue I've encountered repeatedly is that we don't speak the same language. When a data engineer talks about "data quality," software engineers often think we're perfectionists about something that doesn't matter.

I've found success in reframing data quality concerns as:

"Application correctness" (appeals to engineering pride)

"Customer experience issues" (ties to their product metrics)

"Decision reliability" (connects to business outcomes)

Shift Left in Product Planning

Get involved in product planning sessions and feature kickoffs. When data considerations become part of the initial planning, they're less likely to be seen as an annoying afterthought. Some specific tactics:

Request a "data implications" section in product requirement documents

Create data contract templates that product managers can fill out before development starts

Offer to review event schemas before implementation
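A data contract template doesn't need to be fancy. Here is a sketch that assumes a Python codebase and a hypothetical `checkout_completed` event. A filled-out contract can be little more than a typed record that product and engineering both review before development starts. The `FieldSpec`/`DataContract` names and the fields are illustrative, not a standard format.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    name: str
    type_name: str            # e.g. "string", "integer", "timestamp"
    required: bool = True
    description: str = ""


@dataclass(frozen=True)
class DataContract:
    event_name: str
    owner_team: str                      # who answers questions and fixes breakages
    version: int                         # bump on any breaking change
    fields: tuple[FieldSpec, ...] = ()
    consumers: tuple[str, ...] = ()      # downstream dashboards/reports that depend on it

    def required_fields(self) -> set[str]:
        return {f.name for f in self.fields if f.required}

    def missing_fields(self, event: dict) -> list[str]:
        """Required fields absent (or None) in a sample event, sorted by name."""
        return sorted(n for n in self.required_fields() if event.get(n) is None)


# A hypothetical, filled-out contract for a checkout event.
CHECKOUT_COMPLETED = DataContract(
    event_name="checkout_completed",
    owner_team="payments",
    version=1,
    fields=(
        FieldSpec("order_id", "string", description="Unique order identifier"),
        FieldSpec("amount_cents", "integer", description="Order total in minor units"),
        FieldSpec("coupon_code", "string", required=False),
    ),
    consumers=("revenue_dashboard",),
)
```

Listing `consumers` in the contract is intentional. It tells the producing team which dashboards break if the event changes.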

Automated Testing Alliances

Most software engineers value good testing—leverage this cultural norm. Work with engineering teams to implement event validation in their existing test suites. This builds on something they already value (good test coverage) while ensuring data quality upstream.
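Suppose the application has a function that builds the tracking event (`build_checkout_event` below is hypothetical). An event check can then sit right next to the functional tests, using Python's standard `unittest` module. The field list is an assumption and would normally come from the agreed contract.

```python
import unittest

# Hypothetical expectations for one event; in practice these would come
# from the agreed data contract.
REQUIRED_FIELDS = {"event_name": str, "user_id": str, "amount_cents": int}


def build_checkout_event(user_id: str, amount_cents: int) -> dict:
    """Hypothetical application code that builds the tracking payload."""
    return {
        "event_name": "checkout_completed",
        "user_id": user_id,
        "amount_cents": amount_cents,
    }


class CheckoutEventContractTest(unittest.TestCase):
    def test_required_fields_present_with_expected_types(self):
        event = build_checkout_event("u-123", 4999)
        for name, expected_type in REQUIRED_FIELDS.items():
            with self.subTest(field=name):
                self.assertIn(name, event)
                # isinstance also rejects None, so nulls fail here too.
                self.assertIsInstance(event[name], expected_type)
```

It's a plain `unittest` test case, so it runs under `python -m unittest` or pytest in whatever CI job the team already has.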

Feedback Loops

Create quick, visual feedback for engineers on the quality of the data they produce. Dashboards showing error rates, schema compliance, or null percentages by team can create healthy competition and awareness.
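Here's a rough sketch of computing null percentages and schema compliance by team from a batch of events. It assumes, hypothetically, that each event carries a `team` attribute identifying the producer. In practice you might derive that from the event name or the source service.

```python
from collections import defaultdict


def quality_by_team(events: list, required_fields: tuple) -> dict:
    """Per producing team: event count, null rate, and share of fully compliant events.

    Assumes (hypothetically) that every event has a "team" attribute.
    """
    stats = defaultdict(lambda: {"events": 0, "null_values": 0, "compliant": 0})
    for event in events:
        s = stats[event.get("team", "unknown")]
        s["events"] += 1
        # A missing field and an explicit None both count as null.
        nulls = sum(1 for f in required_fields if event.get(f) is None)
        s["null_values"] += nulls
        if nulls == 0:
            s["compliant"] += 1

    report = {}
    for team, s in stats.items():
        checked = s["events"] * len(required_fields)
        report[team] = {
            "events": s["events"],
            "null_rate": s["null_values"] / checked if checked else 0.0,
            "compliance_rate": s["compliant"] / s["events"],
        }
    return report
```

The computation itself is trivial. The visibility comes from publishing it per team, for example as a daily table behind a dashboard.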

Communication Approaches That Work in Practice

Rather than hypothetical scenarios, here are some practical approaches I've seen work in real organisations:

Cross-functional Data Quality Forums: I've seen companies establish recurring meetings where data, product, and engineering team representatives review data quality metrics and issues. This dedicated space makes data quality a shared responsibility rather than just "the data team's problem."

Problem-driven collaboration: Sometimes, the most effective approach is reactive. When a data issue causes a significant business problem, use that as a catalyst for change. I was in a situation where incorrect tracking led to a major discrepancy in revenue reporting. This crisis created the opportunity to implement proper data validation processes.

Pre-launch checklists for events: One team I worked with implemented a lightweight validation process for new tracking events. The checklist wasn't comprehensive—it was just basic validation that events contained required fields and followed naming conventions.

Building relationships at the individual level: I've found that cultivating relationships with key engineers who "get it" can be more effective than formal processes. These allies become champions for data quality within their teams.
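The lightweight pre-launch checklist for events mentioned above can be partly automated. This sketch assumes hypothetical conventions: snake_case "object_action" event names and three mandatory fields. Swap in whatever your team actually agreed on.

```python
import re

# Hypothetical team conventions: snake_case "object_action" names
# (e.g. "checkout_completed") and a few fields every event must carry.
EVENT_NAME_PATTERN = re.compile(r"^[a-z]+(_[a-z]+)+$")
REQUIRED_FIELDS = ("event_name", "event_timestamp", "user_id")


def prelaunch_check(sample_event: dict) -> list[str]:
    """Return a list of checklist failures for one sample event (empty = ready)."""
    problems = []
    name = str(sample_event.get("event_name") or "")
    if not EVENT_NAME_PATTERN.match(name):
        problems.append(f"event name {name!r} does not follow snake_case object_action")
    for field in REQUIRED_FIELDS:
        # Treat missing, None, and empty-string values as absent.
        if sample_event.get(field) in (None, ""):
            problems.append(f"missing required field: {field}")
    return problems
```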

The Tool Obsession

Between 2020 and 2023, I saw people praising data contracts as saviours, but somehow that sentiment has died out in my LinkedIn feed. Why? My theory is that we love tools more than we love processes and discipline.

Just look at the explosion of data quality tools in recent years:

Great Expectations - For data validation

dbt - With its built-in testing capabilities

Monte Carlo, Soda, Anomalo - For data observability

Deequ, TFX Data Validation - For big data validation

OpenLineage, Marquez - For lineage tracking

Amundsen, DataHub - For data discovery and metadata

All these tools promise to solve our data quality problems, but they often become just another layer on top of the mess. The tools aren't the problem—they're great when used correctly. The issue is that implementing them without addressing the underlying organisational and process issues is like putting a band-aid on a broken leg.

We also keep looking for excuses to try the latest tools, only to "cry" later about the changes we have to make to fix things. It's a strange cycle: we discover a tool, get excited about its potential, implement it halfway, realise it requires actual work and process changes, and then abandon it for the next shiny tool that promises to fix everything without requiring us to change our behaviours.

The Reality of Data Engineering Today

In reality, events are sent without data contracts, weird legacy setups remain untouched for years, and data teams pile up CASE statements to work around software engineering issues. On top of that, numerous custom changes are made manually, eventually messing up production data. Suddenly, the data team's ass is on fire because the numbers don't match or look strange.

This typically happens for two reasons:

Crappy data coming in without validation against a data contract or data rules

Manual adjustments made somewhere, with something not updated properly along the way

I've been in too many "war room" meetings where we're frantically trying to figure out why the sales numbers are off by 15% right before the board meeting. Usually, it traces back to some small change in an upstream system that nobody thought to communicate to the data team.

The Company Size Effect

I've noticed how dramatically company size impacts data quality initiatives. It's almost a completely different game depending on where you are in the company lifecycle.

Small Companies & Startups

If you work at a small company or startup, your data team is small (usually one person), which makes it even harder to promote quality strategies. On the upside, you typically work at a very different tempo, in an environment where "move fast and break things" is mandatory.

When you're the lone data person, you're juggling:

Building the entire data stack from scratch

Creating reports that the CEO needed yesterday

Making sense of rapidly changing product data

Educating everyone about data best practices

In a startup environment, introducing formal data contracts can be considered unnecessary bureaucracy when everyone is focused on growth and survival. At this stage, being pragmatic about quality initiatives is crucial—focus on documenting critical data sources and building relationships with the engineering team.

In this environment, perfect is truly the enemy of good. Your initial focus should be on getting the basics right and establishing trusted relationships with the engineering team.

Medium-Sized Companies

As companies mature and their ideas and data flows stabilise, data quality initiatives need to happen (or at least start to happen). Process, established communication lines, and standardised approaches should become part of your data strategy.

This is the perfect time to introduce concepts like:

Basic data contracts for new features

Simple data validation in critical pipelines

Regular data quality meetings with stakeholders

You have enough people and stability to make changes at this stage, but you're not yet so big that changing course becomes nearly impossible.

Enterprise Level

At an enterprise scale, data quality becomes both critical and complex. You have the resources to implement sophisticated data quality frameworks, but you also have:

Legacy systems nobody understands anymore

Dozens of teams generating data

Complex organisational politics

Multiple competing priorities

I've seen large enterprises spend millions on data quality tools and initiatives, only to see them fail because they didn't address the fundamental organisational issues. The tools get implemented, but nobody uses them as intended, and old habits persist.

Proving Value to Business Stakeholders

The hardest part is proving the value to business stakeholders. This is where I think many data quality initiatives fail: they can't demonstrate ROI in a language executives understand.

Quantify the Cost of “Bad Data”

Track incidents where “bad data” led to:

Incorrect business decisions

Engineering time spent fixing issues

Delayed releases or reports

Customer impact

Put an actual dollar amount on these incidents. When you can say, "We spent 200 engineering hours last quarter fixing data quality issues, costing us approximately $30,000," you suddenly have attention.
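The arithmetic is deliberately simple: hours spent multiplied by a blended hourly cost. The figures above imply roughly $150 per hour ($30,000 / 200 hours). Here's a minimal sketch that assumes you log engineering hours per incident. The `Incident` record, the incident labels, and the rate are illustrative placeholders, not real benchmarks.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    description: str
    engineering_hours: float


def incident_cost(incidents: list, hourly_rate: float) -> tuple:
    """Return (total engineering hours, estimated cost) for a set of incidents."""
    hours = sum(i.engineering_hours for i in incidents)
    return hours, hours * hourly_rate


# Illustrative quarter: 200 hours at a blended $150/hour -> $30,000.
quarter = [
    Incident("hypothetical incident A", 120),
    Incident("hypothetical incident B", 80),
]
hours, cost = incident_cost(quarter, hourly_rate=150)
```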

Start Small and Show Quick Wins

Don't try to boil the ocean. First, implement quality checks on your most critical data flows, then demonstrate how they caught issues that would have otherwise made it to production.

Speak Their Language

Frame data quality in terms that executives care about:

Risk mitigation

Competitive advantage

Customer trust

Regulatory compliance

Operational efficiency

Instead of discussing "data contracts" and "testing frameworks," discuss "ensuring accurate financial reporting" or "maintaining customer trust through reliable data."

Create a Data Quality Dashboard

Make data quality visible. Create a simple dashboard showing:

Number of incidents caught before production

Time saved by automated checks

Key data quality metrics by domain

Trend lines showing improvement over time

When stakeholders see the positive impact, they're more likely to support continued investment.

What Can Work?

After being in the field for a while, I think I've seen a few approaches that can make a difference:

1. Finding the Right Balance in Team Structure

While embedding data engineers in product teams sounds ideal, it's not always cost-effective or practical. There are fundamental tradeoffs to consider:

Pros of Embedded DEs:

Better alignment with product goals

Earlier involvement in data design decisions

More effective collaboration with engineers

Cons of Embedded DEs:

Data engineers may not have enough work to stay fully utilised

They can diverge skill-wise from the core data team

Loss of standardisation across data pipelines

Reduced knowledge sharing between data professionals

The right approach varies by organisation, but most successful companies I've seen use a hybrid model: a centralised data platform team handles infrastructure, standards, and common tools, while domain-specific data engineers work closely with product teams but maintain ties to the central data organisation.

2. Implement Validation at Every Stage

Validate data at every touchpoint:

When events are first created (on the software engineering side)

When they're ingested into your data lake/warehouse

When they're transformed

Before they're used in analytics

This multi-layered approach creates a defence-in-depth strategy. The validation at the source (in the application code) is particularly critical. When data quality checks are part of the software engineering deployment, you catch issues before they contaminate your data systems.
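Here is a compressed sketch of that defence-in-depth idea. The same `validate` helper runs at every stage, with checks suited to the shape of the data at that point. The stage functions, field names, and checks are hypothetical. In a real setup each stage would be a separate system: application code, ingestion job, transformation step, and publishing step.

```python
from typing import Callable

# Each check returns a list of human-readable problems (empty list == pass).
Check = Callable[[dict], list]


class ValidationError(Exception):
    """Raised when a record fails the checks for a given pipeline stage."""


def validate(stage: str, record: dict, checks: list[Check]) -> dict:
    problems = [problem for check in checks for problem in check(record)]
    if problems:
        raise ValidationError(f"[{stage}] " + "; ".join(problems))
    return record


# Hypothetical checks; real ones would come from your data contract.
def has_user_id(r: dict) -> list:
    return [] if r.get("user_id") else ["user_id is empty"]


def amount_cents_is_int(r: dict) -> list:
    return [] if type(r.get("amount_cents")) is int else ["amount_cents is not an int"]


def amount_is_non_negative(r: dict) -> list:
    v = r.get("amount")
    ok = isinstance(v, (int, float)) and v >= 0
    return [] if ok else ["amount is not a non-negative number"]


def emit(event: dict) -> dict:
    # Stage 1: in application code, before the event leaves the service.
    return validate("source", event, [has_user_id, amount_cents_is_int])


def ingest(event: dict) -> dict:
    # Stage 2: on arrival in the lake/warehouse; re-check, since producers can be bypassed.
    return validate("ingest", event, [has_user_id, amount_cents_is_int])


def transform(event: dict) -> dict:
    # Stage 3: after reshaping into an analytics-friendly row.
    row = {"user_id": event["user_id"], "amount": event["amount_cents"] / 100}
    return validate("transform", row, [has_user_id, amount_is_non_negative])


def publish(rows: list) -> list:
    # Stage 4: a table-level check before analytics reads the data.
    if not rows:
        raise ValidationError("[publish] refusing to publish an empty table")
    return rows
```

Each error message is prefixed with the stage name. When something fails, you immediately know whether it was the producer, the ingestion, or the transformation.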

3. Create Clear Ownership

Make it clear who owns data quality for each domain and dataset. This prevents the "not my problem" syndrome.
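Ownership can start as a plain mapping from dataset to team, plus a check that flags anything nobody owns. The registry and dataset names below are hypothetical. Many teams keep this information in a data catalogue or in a file in the repository instead.

```python
# Hypothetical ownership registry: dataset -> accountable team.
OWNERS = {
    "events.checkout_completed": "payments",
    "analytics.daily_revenue": "data-platform",
}


def unowned(datasets: list[str]) -> list[str]:
    """Datasets with no accountable owner, sorted so reports are stable."""
    return sorted(d for d in datasets if not OWNERS.get(d))


def owner_of(dataset: str) -> str:
    """Who should be notified when a quality check on this dataset fails."""
    try:
        return OWNERS[dataset]
    except KeyError:
        raise LookupError(f"{dataset} has no owner; assign one before it goes live") from None
```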

4. Build Data Quality Metrics

Track and report on data quality the same way you would any other business metric. What gets measured gets managed.

5. Automate Testing in CI/CD Pipelines

Make data testing part of your deployment process. If the tests fail, the deployment should too. This applies primarily to the software engineering side - data validation should be as much a part of their testing process as functional tests. Quality improves dramatically when data emission is treated as a first-class feature rather than an afterthought.
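On the software engineering side, a data quality gate can be as small as a script that validates sample events and returns a non-zero exit code on failure. The samples might be fixtures produced by the test suite. Most CI systems treat a non-zero exit code as a failed job. This sketch uses a hypothetical field list, script name, and fixture format (a JSON array of events).

```python
import json
import sys

# Hypothetical contract for the events this service emits.
REQUIRED_FIELDS = {"event_name": str, "user_id": str, "amount_cents": int}


def check_events(events: list) -> list[str]:
    """Return one error message per field that is missing or mistyped."""
    errors = []
    for i, event in enumerate(events):
        for name, expected_type in REQUIRED_FIELDS.items():
            # A missing field yields None (type NoneType), so it is caught here too.
            if type(event.get(name)) is not expected_type:
                errors.append(f"event {i}: {name} should be {expected_type.__name__}")
    return errors


def main(argv: list[str]) -> int:
    """Validate a JSON fixture of sample events; the return value is the exit code."""
    if len(argv) != 1:
        print("usage: check_events.py <events.json>", file=sys.stderr)
        return 2
    with open(argv[0], encoding="utf-8") as f:
        events = json.load(f)
    errors = check_events(events)
    for error in errors:
        print(error, file=sys.stderr)
    return 1 if errors else 0  # non-zero fails the CI job, and with it the deployment
```

A CI step can call it through a small wrapper such as `sys.exit(main(sys.argv[1:]))`. Any contract violation then blocks the deployment, just as a failing unit test would.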

The Shift Left Movement for Data

The concept of "shifting left" comes from software development, where testing is moved earlier in the development process. For data, this means:

Data contracts are defined before any code is written

Data validation is implemented at the source

Data pipelines are tested automatically

Data quality gates are built into CI/CD pipelines

I've seen this work best when organisations treat data as a product, not just a byproduct of their applications. Things improve dramatically when data has product managers, roadmaps, and quality standards.

Final Thoughts

I don't think we'll ever fully solve the data quality problem—it's inherent to the complexity of modern systems. However, we can improve it by addressing cultural and organisational issues, not just by implementing more tools.

What I've seen work is creating mutual understanding and shared responsibility. When software engineers understand how their data will be used and why quality matters, they are far more likely to implement proper validation. Similarly, when data engineers participate in application design discussions early on, they can help shape how data is generated and stored.

Shifting left isn't just about tools or processes; it's about breaking down silos and creating shared ownership of data quality across the entire organisation. It might be a challenging shift in mindset, but I've yet to see a better approach to solving this persistent problem.
