Structuring Data Team Repositories

Or what worked at different stages of a data team, from my experience

Version control and I only got together at Wix, where I started as a Data Engineer (the role was later renamed Data Developer; now I think they have the Data Engineer title back 😅). Before that, I did things like a “real professional”: straight to prod.

Since then, I've seen very different approaches and later had the chance to put my theories into practice, so I decided to share my two cents on this.

Keep in mind that all my orchestration experience is with Apache Airflow, so the structures and patterns I've used are based on it. I'm also a fan of dbt, which will show here too (and yes, I know both tools have their downsides, but it is what it is).

One-man band

When I joined one company, I had the chance to build things from scratch. Having learned from some past mistakes, I started with something quite simple but effective.

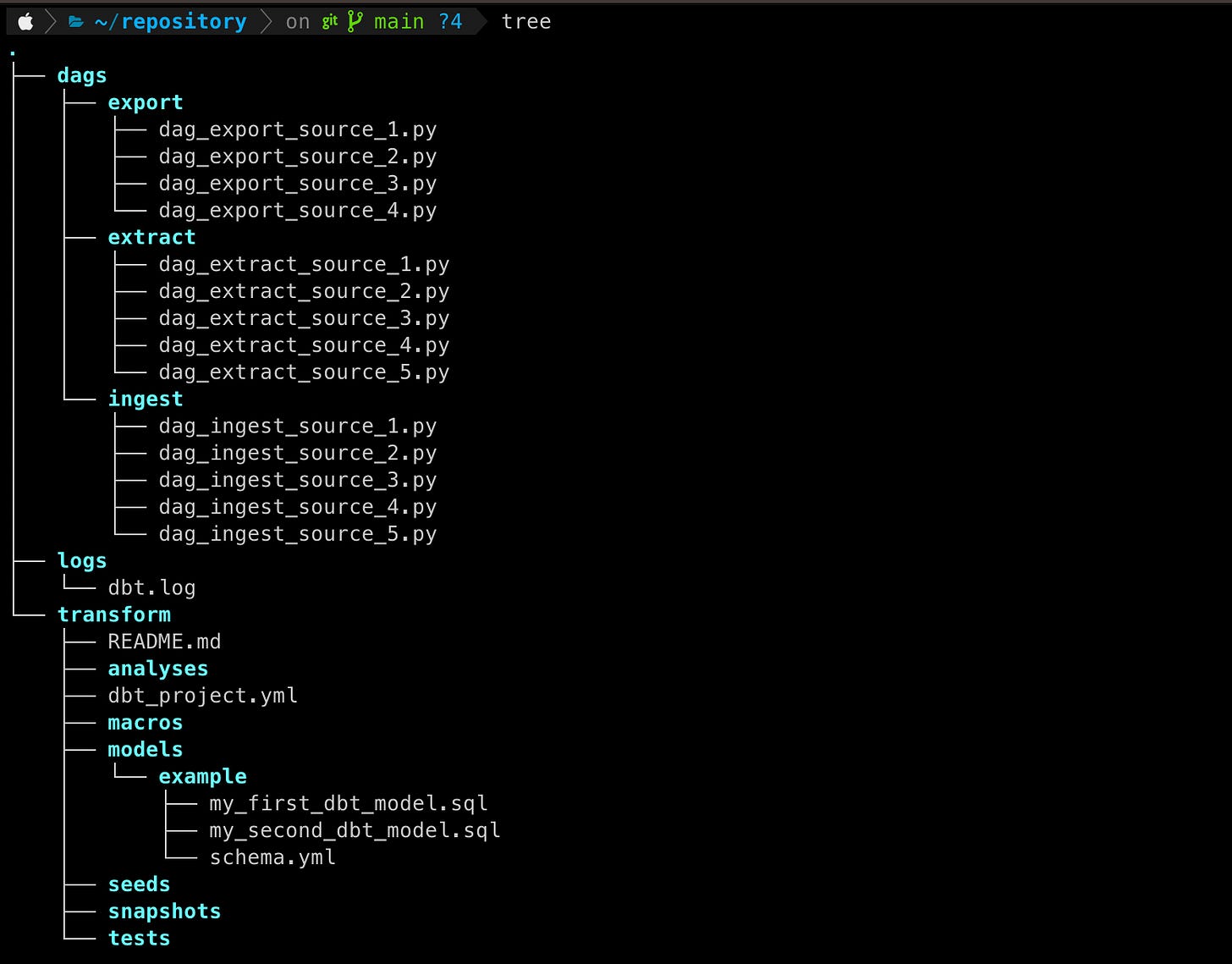

We have a mono repo with a single dbt project running all the transformations, and separate folders for the different Airflow operations.

When you're alone or the team is really small, it's quick to navigate, but there is often a lot of copy-paste (until you notice it yourself 🙃). Usually, if you join a company to build the foundations, you're quickly buried under tons of work, and in the heat of the moment, copy-pasting looks like the quick and easy way to get the job done.
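To make the mono repo layout a bit more concrete, here is a minimal sketch that scaffolds it with Python's `pathlib`. The folder names (`dbt/models`, `dags/extract`, `dags/ingest`, `dags/export`) are assumptions for illustration, borrowed from the extract/ingest/export split mentioned later in this post; they are not read off the image.

```python
import tempfile
from pathlib import Path

# Hypothetical folder names: one dbt project for all transformations,
# plus one Airflow folder per type of operation.
MONO_REPO_LAYOUT = [
    "dbt/models",
    "dags/extract",
    "dags/ingest",
    "dags/export",
]


def scaffold(root: Path) -> list:
    """Create the mono repo skeleton under `root` and return the created folders."""
    created = []
    for rel in MONO_REPO_LAYOUT:
        folder = root / rel
        folder.mkdir(parents=True, exist_ok=True)
        created.append(folder)
    return created


if __name__ == "__main__":
    # Scaffold into a throwaway directory and print the relative paths.
    with tempfile.TemporaryDirectory() as tmp:
        for folder in scaffold(Path(tmp)):
            print(folder.relative_to(tmp).as_posix())
```

The point is less the script than the shape: everything lives in one place, which is exactly why it is quick to navigate when you're alone.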

Repetition, repetition, repetition

As the company grows, so does the data you have to deal with. Funnily enough, this is when patterns become visible. Depending on whether you're still alone or have more colleagues running dags and dbt transformations, the next steps might differ, but the result is more or less the same.

Scenario 0 (since everybody knows that lists start from 0): you're the sole captain of the ship, and all the knowledge and connections live in your head. A couple of quick and dirty fixes here and there, and you have a ship held together entirely by duct tape, with some moving parts kept going by WD-40.

Eventually, you realize it won’t scale and decide to fix it and make it modular.
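One common way to make it modular is to stop copy-pasting near-identical DAG files and generate them from configuration instead. A minimal sketch, assuming hypothetical `SourceConfig` and `build_dag_spec` names: the spec is a plain dict so the example runs without Airflow installed. In a real project you would build an Airflow DAG from each spec inside the loop and register it at module level (for example via `globals()`) so the scheduler picks it up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceConfig:
    """One entry per source: adding a source means adding a line, not a file."""
    name: str
    table: str
    schedule: str = "@daily"


# Hypothetical sources; in practice this could live in a YAML file.
SOURCES = [
    SourceConfig("crm", "contacts"),
    SourceConfig("billing", "invoices", schedule="@hourly"),
]


def build_dag_spec(source: SourceConfig) -> dict:
    """Turn one config entry into a DAG description (plain dict, no Airflow needed)."""
    return {
        "dag_id": f"extract_{source.name}_{source.table}",
        "schedule": source.schedule,
        "tasks": [f"extract_{source.table}", f"load_{source.table}"],
    }


if __name__ == "__main__":
    for spec in map(build_dag_spec, SOURCES):
        print(spec["dag_id"], spec["schedule"], spec["tasks"])
```

The trade-off: one factory is easier to fix than ten copies, but it also becomes a single point that everyone's dags depend on, which is where the modularity discussion below comes in.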

Now, if you're lucky, you get reinforcements. You gladly shove things off your plate (the boring ones, the ones connected to people you'd rather not interact with, both, or for whatever other reason). Now two scenarios can happen:

Scenario 1: A new person doesn't ask questions.

Scenario 2: A new person asks a lot of questions.

If you're in Scenario 1, you'll train them in all the copy-paste techniques, and most likely, sooner rather than later, you'll be back in Scenario 0.

Hopefully, Scenario 2 happens, and you quickly realize how much technical debt you've accumulated along the way.

About Modularity

This duplication can be solved in multiple ways:

A separate repository for common components, with versioned releases and proper installation via pip

A utils folder or plugins for Apache Airflow

Which one to choose depends heavily on your setup:

Managed Apache Airflow: plugins are way easier. Usually, little to nothing is needed from DevOps.

Self-managed Airflow: this most likely means you (or someone on your team) can handle infrastructure. Go with the separate repository and apply all the best practices for creating Python libraries: versioned releases, proper tests of your components, etc.

So it would look something like this.

Of course, I left out the __init__.py files that are mandatory for the plugins approach, but you get the idea.
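As an illustration of a shared component, here is a minimal sketch of a hypothetical `build_default_args` helper. It could live in the separate common package (say, a hypothetical `common/defaults.py`) or in the plugins/utils folder; either way, dags import it instead of copy-pasting their own `default_args` dict. The keys `owner`, `retries` and `retry_delay` are standard Airflow operator arguments; the default values are assumptions. The test function is pytest-style, in the spirit of properly testing your components.

```python
from datetime import timedelta


def build_default_args(owner: str, retries: int = 2, retry_minutes: int = 5) -> dict:
    """Shared default_args for every DAG; the values are illustrative defaults."""
    if not owner:
        # Ownership should be explicit, so refuse dags without an owner.
        raise ValueError("every DAG needs an owner")
    return {
        "owner": owner,
        "retries": retries,
        "retry_delay": timedelta(minutes=retry_minutes),
    }


def test_build_default_args():
    """Pytest-style test that ships together with the shared component."""
    args = build_default_args("data-team", retries=3)
    assert args["owner"] == "data-team"
    assert args["retries"] == 3
    assert args["retry_delay"] == timedelta(minutes=5)
```

In a DAG file, usage would then be something like `from common.defaults import build_default_args` (hypothetical module path), passing the result as `default_args` when creating the DAG. With the separate-repository approach, a change to these defaults goes out as a new versioned release instead of silently changing every DAG at once.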

The Great Migration

OK, the common components are consolidated, but you still see overlaps, and connecting dags of different actions within the same domain gets trickier. People want ownership and more control over their dags and dependencies.

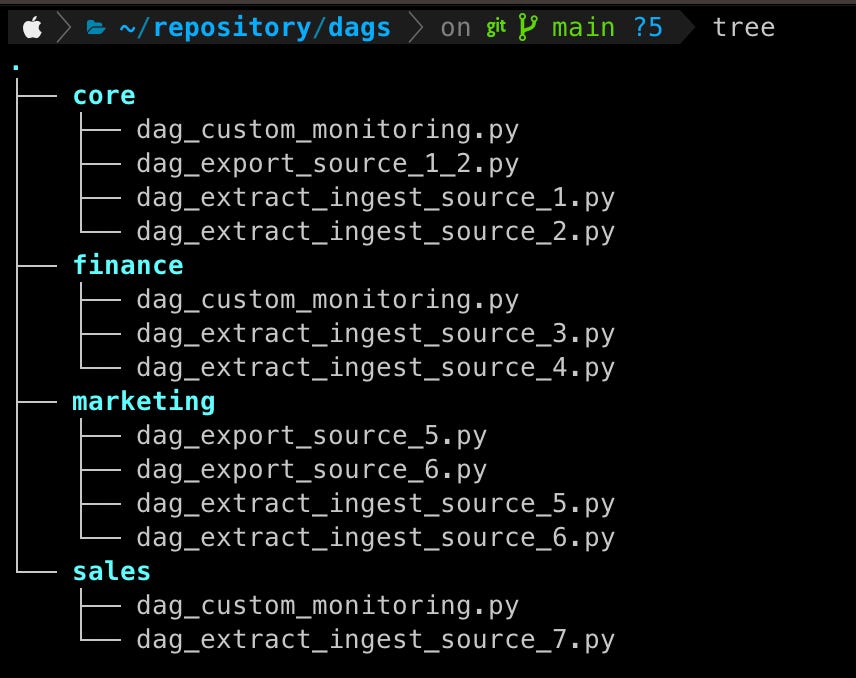

So you create a folder per team and let each team run its things independently, while the dbt thingies still follow the mono repo approach.

Note: A split like this is quite a sensitive topic. You might end up with one or two dags per folder, which can be frustrating and sometimes won't make sense. If you're in that situation, I strongly suggest not forcing it; do the split only when you feel comfortable. In one team, we started in exactly this phase, but it felt half empty, which triggered discussions about whether we should stick with the extract/ingest/export split.
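Folders per team become more useful once ownership is enforced by tooling and not just by convention. A minimal sketch, assuming the repository is hosted on GitHub (which supports a `CODEOWNERS` file) and a hypothetical mapping of team folders to GitHub team handles: it renders one `CODEOWNERS` rule per team's dags folder, while `/dbt/` stays with a central owner because dbt still follows the mono repo approach.

```python
# Hypothetical team folders and GitHub team handles.
TEAM_OWNERS = {
    "marketing": "@acme/marketing-data",
    "finance": "@acme/finance-data",
}


def render_codeowners(team_owners: dict, dbt_owner: str) -> str:
    """Render CODEOWNERS rules: one per team dags folder, plus a central dbt owner."""
    lines = ["# One dags folder per team"]
    for team in sorted(team_owners):  # sorted so the output is stable in CI
        lines.append(f"/dags/{team}/ {team_owners[team]}")
    # dbt still follows the mono repo approach, so it keeps a single owner.
    lines.append(f"/dbt/ {dbt_owner}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_codeowners(TEAM_OWNERS, "@acme/data-platform"), end="")
```

Generating the file from one mapping (instead of editing it by hand) keeps it in sync when a team folder is added or, as in the note above, merged back because it felt half empty.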

Your dags folder now looks something like this:

Data Mesh Awakens

After all the ChatGPT and LLM hype, I no longer see people in my LinkedIn feed preaching that Data Mesh is the saviour of their data teams.

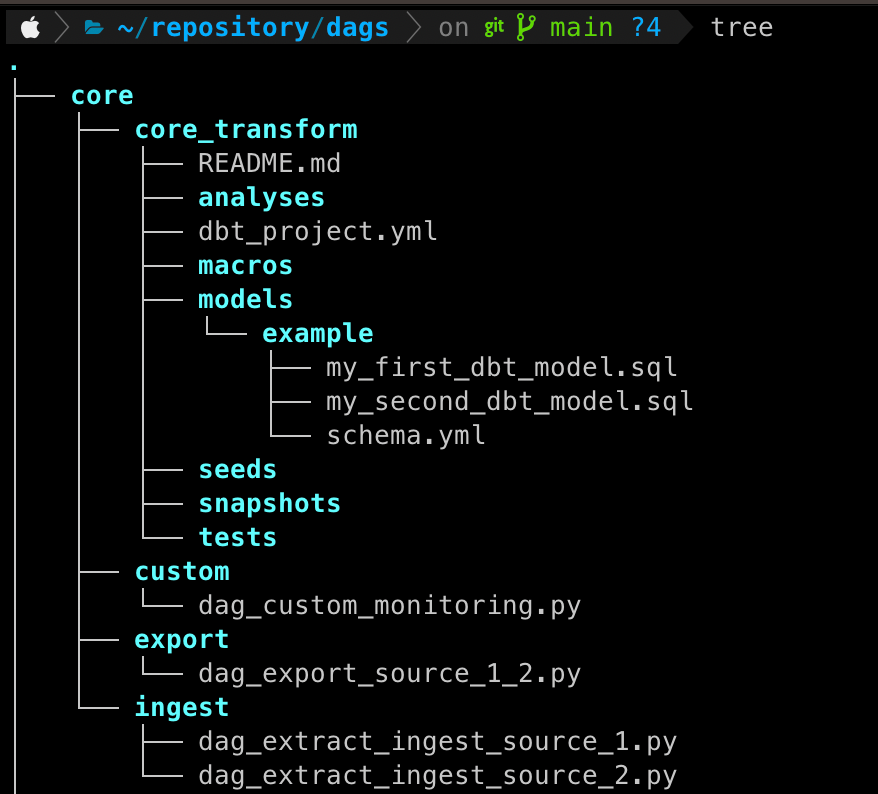

Nonetheless, I see a good place for some parts of the concept in this evolution of your repository.

Depending on how fast you've grown and how many data people work in the repository, you might split into completely separate repositories per domain data team, or adjust the existing folders. Here is a snippet of it:

Or you can introduce layers per action:

And here we are. The circle is now complete: we're kind of back to the one-man band situation 😅

I'm not going to preach one approach over the other, simply because both have their pros.
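If you go with layers per action inside each domain, a small CI check can keep the convention from drifting. A minimal sketch, assuming a hypothetical `dags/<domain>/<layer>/<file>.py` layout that reuses the extract/ingest/export layers: it returns every DAG file that doesn't fit, so the pipeline can fail with a clear list.

```python
from pathlib import PurePosixPath

# Hypothetical layers per action, reused in every domain folder.
ALLOWED_LAYERS = {"extract", "ingest", "export"}


def layout_violations(dag_files: list) -> list:
    """Return DAG files that don't follow dags/<domain>/<layer>/<file>.py."""
    violations = []
    for path in dag_files:
        parts = PurePosixPath(path).parts
        ok = (
            len(parts) == 4
            and parts[0] == "dags"
            and parts[2] in ALLOWED_LAYERS
            and parts[3].endswith(".py")
        )
        if not ok:
            violations.append(path)
    return violations


if __name__ == "__main__":
    files = [
        "dags/marketing/extract/crm_contacts.py",
        "dags/finance/misc/adhoc.py",
    ]
    print(layout_violations(files))
```

The same check works whether each domain lives in its own repository (drop the domain level) or in folders of a shared one; only the expected depth changes.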

Ranting and Final Thoughts

Not all of these solutions and approaches will work for you; this is just a summary of the stages the companies were in when I joined and what I saw work. The thing is, as with running a data team, all changes have to be incremental: start with everything centralized and decentralize little by little where it's needed.

Having a lot of different dags running on a single Airflow cluster can make dependency management painful, so basic rules for introducing new libraries and their versions (e.g., isolating them with the DockerOperator or the PythonVirtualenvOperator) have to be in place. CI/CD is a must, not a nice-to-have. If you're interested in a somewhat different approach to adding new components:

I somehow didn't vibe with this approach, mostly because of its nature: I like to be the headless chicken that goes fast and breaks things.
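Back to those basic rules for introducing libraries on a shared cluster: some of them can be enforced in CI/CD directly. A minimal sketch, assuming a hypothetical team policy that every library in a shared `requirements.txt` must be pinned to an exact version. The check returns the offending lines so the pipeline can fail with a readable message; for simplicity it also flags anything it doesn't understand, such as environment markers or `-r` includes. The script name `check_pins.py` is hypothetical.

```python
import re
import sys

# "name==version", optionally with extras, e.g. "package[extra]==1.2.3".
PINNED = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*(\[[A-Za-z0-9,._-]+\])?==\S+$")


def unpinned_requirements(text: str) -> list:
    """Return requirement lines that are not pinned to an exact version."""
    problems = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if line and not PINNED.match(line):
            problems.append(line)
    return problems


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage in CI: python check_pins.py requirements.txt
    with open(sys.argv[1], encoding="utf-8") as fh:
        bad = unpinned_requirements(fh.read())
    for line in bad:
        print(f"not pinned: {line}")
    sys.exit(1 if bad else 0)
```

Exact pins are a deliberately strict choice: on a cluster shared by many dags, an unpinned upgrade in one team's requirements can break everyone else, so the friction of bumping versions explicitly is usually worth it.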

Having many repositories running in one Airflow cluster will be a pain to maintain, but running many small clusters isn't always cost-efficient either, given the administrative overhead. Choose wisely and carefully.