A Tale of Two: the Lazy and the Eager

Nothing much to add: lazy and eager DataFrame APIs, and what those terms actually mean.

People like to compare different DataFrame APIs, and we have tons of those.

Pandas - https://pandas.pydata.org/

Dask - https://www.dask.org/

Polars - https://www.pola.rs/

Spark - https://spark.apache.org/

Vaex - https://vaex.io/

Let’s even add DuckDB to the mix - https://duckdb.org/

How do you avoid getting lost, and understand what is actually being measured and how these libraries work? For simplicity's sake, I will talk only about Parquet files, just because they're pretty much a standard in the data processing area.

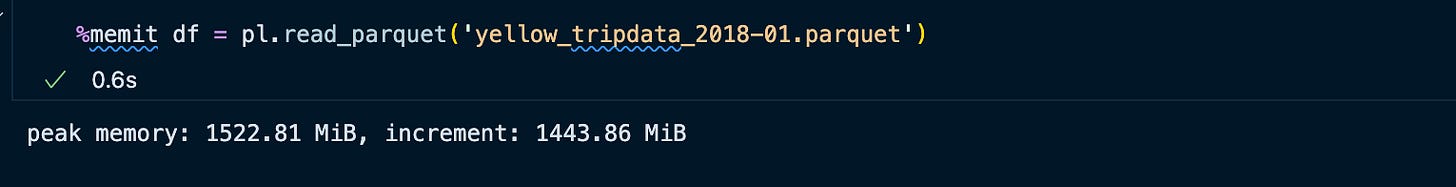

The example file, of course, is the legendary Yellow Taxi trip data, but only a single month of it: January 2018. Its size is around 124MB compressed, but once loaded into memory, it's way bigger.

Eager execution

Executes all of your commands eagerly. Duh!

By eagerly, I mean it will actually do the work step by step. Read, aggregate, filter, and write are each executed immediately, in the same order as you call them.

DataFrame libraries that execute eagerly:

Pandas - even though it can use PyArrow to read Parquet, it still loads the data into memory immediately

Polars - read_parquet does an eager read

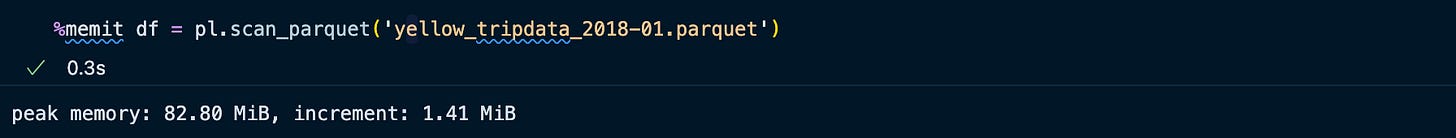

Lazy execution

It works exactly how it sounds. Nothing is read into memory until you call an action; only then does the library read the data and apply its optimizations. Which optimizations you get always depends on the implementation inside the library, but all of the lazy ones here have predicate push-down.
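To make the "nothing happens until you call an action" part concrete, here is a minimal toy sketch in plain Python. `ToyLazyFrame` and `load_rows` are hypothetical names made up for this illustration; this is not how any of the libraries above is implemented, just the general idea of recording steps and running them later.

```python
from typing import Callable, Dict, Iterable, List

Row = Dict[str, float]


def load_rows() -> List[Row]:
    """Stand-in for reading a file; counts how many times it was called."""
    load_rows.calls += 1
    return [{"trip": i, "fare": float(i)} for i in range(10)]


load_rows.calls = 0


class ToyLazyFrame:
    """Hypothetical lazy frame: records steps, runs nothing until collect()."""

    def __init__(self, source: Callable[[], List[Row]]):
        self._source = source
        self._steps: List[Callable[[Iterable[Row]], Iterable[Row]]] = []

    def filter(self, predicate: Callable[[Row], bool]) -> "ToyLazyFrame":
        # Only remember the step; the source is not touched here.
        new = ToyLazyFrame(self._source)
        new._steps = self._steps + [lambda rows: (r for r in rows if predicate(r))]
        return new

    def collect(self) -> List[Row]:
        # The "action": read the source now and apply the recorded steps.
        rows: Iterable[Row] = self._source()
        for step in self._steps:
            rows = step(rows)
        return list(rows)


# Eager style: the data is read on this very line.
eager = load_rows()
eager_filtered = [r for r in eager if r["fare"] > 7]

# Lazy style: building the query reads nothing.
load_rows.calls = 0
lazy = ToyLazyFrame(load_rows).filter(lambda r: r["fare"] > 7)
calls_before_collect = load_rows.calls
result = lazy.collect()
calls_after_collect = load_rows.calls
```

Both styles end up with the same rows; the only difference is when the read happens. A real engine would also use the recorded steps to optimize the plan before running it, which this toy does not attempt.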

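Predicate push-down works by evaluating the filtering predicates in the query against metadata stored in the Parquet files, so chunks of the file whose statistics (for example, min and max values) rule out any match can be skipped without being read.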
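A rough sketch of that idea, assuming each row group carries min/max statistics for a column (which is what Parquet metadata typically holds). `RowGroup` and `scan_fares_above` are hypothetical names for this illustration, and the numbers are made up; real readers do this against the file footer, not against Python objects.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RowGroup:
    """Toy stand-in for one Parquet row group and its column statistics."""
    min_fare: float
    max_fare: float
    fares: List[float]


def scan_fares_above(row_groups: List[RowGroup], threshold: float) -> Tuple[List[float], int]:
    """Return matching fares and how many row groups had to be read."""
    matches: List[float] = []
    groups_read = 0
    for group in row_groups:
        # Metadata check only: if even the max is too small, skip the group.
        if group.max_fare <= threshold:
            continue
        groups_read += 1
        matches.extend(f for f in group.fares if f > threshold)
    return matches, groups_read


# Made-up data: three row groups with non-overlapping fare ranges.
groups = [
    RowGroup(2.5, 9.0, [2.5, 4.0, 9.0]),
    RowGroup(10.0, 18.0, [10.0, 12.5, 18.0]),
    RowGroup(40.0, 75.0, [40.0, 52.0, 75.0]),
]

matches, groups_read = scan_fares_above(groups, 30.0)
```

Here only one of the three row groups is actually "read"; the other two are dismissed from their statistics alone. How much this helps in practice depends on how the data is sorted and how the file was written.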

As for optimization capabilities beyond predicate push-down:

Spark has the Catalyst Optimizer

Polars has its own query optimizations

Vaex lists some of its own

Dask has a method to optimize

DuckDB - it’s a DB engine, of course it has optimizations, why do you think it’s that fast 😉

Here is how it looks with different libraries. Look at the increment part. Peak memory also depends on other things, such as the objects loaded by the library itself (yeah, I'm lazy too, no surprise that I'm writing this post 😅), since the measurements also include things like:

import dask.dataframe as dd or

import vaex

Nonetheless, the idea is quite easy to grasp:

DuckDB

Spark - JVM ftw, longest run compared to all other lazy libs 😅

Dask

Vaex

Polars - scan_parquet
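If you want to reproduce this kind of "increment vs. peak" measurement yourself, one possible sketch uses the standard library's tracemalloc. `memory_increment`, `eager_load`, and `lazy_load` are hypothetical helpers for this illustration, and this is not necessarily the tool used for the numbers in this post. Also keep in mind, as an assumption to verify per library, that tracemalloc only sees allocations made through Python's allocator, so native buffers may not be counted.

```python
import tracemalloc
from typing import Any, Callable, Tuple


def memory_increment(fn: Callable[[], Any]) -> Tuple[Any, int, int]:
    """Run fn and return (result, bytes still held afterwards, peak bytes)."""
    tracemalloc.start()
    before, _ = tracemalloc.get_traced_memory()
    result = fn()  # keep the result alive so it counts towards the increment
    after, peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return result, after - before, peak - before


def eager_load():
    # Materializes everything right away, like an eager read.
    return [float(i) for i in range(200_000)]


def lazy_load():
    # Only describes the work; no values exist until someone iterates.
    return (float(i) for i in range(200_000))


_, eager_inc, eager_peak = memory_increment(eager_load)
_, lazy_inc, lazy_peak = memory_increment(lazy_load)
```

The eager version holds on to megabytes after the call, while the lazy one holds little more than the generator object. That gap is the same effect you see in the increment numbers for the lazy libraries.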

Summary

When people say, “Oh, this library read this humongous file in seconds”, question what they have in mind. If it’s a simple df.read(), it might be because the underlying DataFrame is lazily executed and nothing has actually been read yet.

Always compare speeds at the point where the final result is produced; only then is the comparison relevant. Remember that implementations also differ; some chained methods can cause performance degradation (Nice catch by Bilal Bobat here). Libraries should be compared using the best practices for each of them.
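A minimal sketch of what timing to the final result can look like, using only the standard library. `time_to_result` is a hypothetical helper, and the generator is just a stand-in for a lazily built query; with a real library you would pass in whatever builds the query and whatever forces the result.

```python
import time
from typing import Any, Callable, Tuple


def time_to_result(
    build_query: Callable[[], Any],
    materialize: Callable[[Any], Any],
) -> Tuple[Any, float, float]:
    """Return (result, seconds to build the query, seconds to the final result)."""
    start = time.perf_counter()
    query = build_query()  # for a lazy API this may do almost nothing
    built = time.perf_counter()
    result = materialize(query)  # this is where the real work happens
    done = time.perf_counter()
    return result, built - start, done - start


# Stand-in for a lazy query: building it is instant, summing it does the work.
result, build_seconds, total_seconds = time_to_result(
    lambda: (i * i for i in range(100_000)),
    lambda query: sum(query),
)
```

Quoting `build_seconds` alone would make the lazy version look miraculous; `total_seconds` is the number worth comparing across libraries.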